An Artificial Neural Network (ANN) is a computational model inspired by the way biological neural networks in the human brain process information. ANNs have generated a lot of excitement in Machine Learning research and industry, thanks to many breakthrough results in speech recognition, computer vision and text processing. In this blog post I will try to develop an understanding of Artificial Neural Networks and then describe a practical ANN project I worked on, in which I compared different network architectures.

A Single Neuron

The basic unit of computation in a neural network is the neuron, often called a node or unit. It receives input from other nodes, or from an external source, and computes an output. Each input has an associated weight (w), which is assigned on the basis of its importance relative to the other inputs. The node applies a function f (defined below) to the weighted sum of its inputs, as shown in Figure 1 below:

The neuron in Figure 1 takes numerical inputs X1 and X2 and has weights w1 and w2 associated with those inputs. Additionally, there is another input, fixed at 1, with weight b (called the Bias) associated with it. We will look at the role of the bias in more detail later.

The output Y from the neuron is computed as shown in Figure 1. The function f is non-linear and is called the Activation Function. Its purpose is to introduce non-linearity into the output of a neuron. This is important because most real-world data is non-linear and we want neurons to learn these non-linear representations.

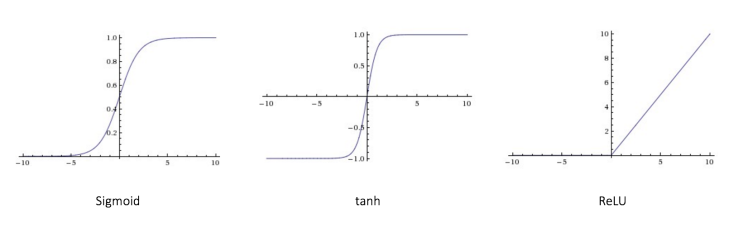
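To make the computation concrete, here is a minimal sketch of a single neuron in plain Python. It assumes the output is Y = f(w1·X1 + w2·X2 + b), as the description of the weighted sum suggests. The function name `neuron_output`, the choice of `math.tanh` as f, and the input values are illustrative assumptions, not taken from the figure.

```python
import math

def neuron_output(inputs, weights, bias, activation):
    """Return f(w1*x1 + w2*x2 + ... + b) for a single neuron."""
    # Weighted sum of the inputs, plus the bias (the weight on the constant input 1).
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The activation function f adds the non-linearity.
    return activation(weighted_sum)

# Hypothetical inputs, weights and bias, chosen only for illustration.
y = neuron_output(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.1,
                  activation=math.tanh)
print(y)
```

Passing the activation as an argument keeps the neuron independent of any particular choice of f.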

Every activation function (or non-linearity) takes a single number and performs a certain fixed mathematical operation on it [2]. There are several activation functions you may encounter in practice:

Sigmoid: takes a real-valued input and squashes it to the range between 0 and 1

σ(x) = 1 / (1 + exp(−x))

tanh: takes a real-valued input and squashes it to the range between -1 and 1

tanh(x) = 2σ(2x) − 1

ReLU: ReLU stands for Rectified Linear Unit. It takes a real-valued input and thresholds it at zero (replaces negative values with zero)

f(x) = max(0, x)
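The three formulas translate almost directly into code. The sketch below uses only Python's standard library. The function names are my own, and `tanh` is deliberately written using the identity tanh(x) = 2σ(2x) − 1 from above rather than `math.tanh`, to show that the two agree.

```python
import math

def sigmoid(x):
    """Squash a real number into the range between 0 and 1."""
    # Note: math.exp overflows for very large negative x; fine for a sketch.
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    """Squash a real number into the range between -1 and 1."""
    return 2.0 * sigmoid(2.0 * x) - 1.0

def relu(x):
    """Threshold at zero: negative values become 0, others pass through."""
    return max(0.0, x)

for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), tanh(x), relu(x))
```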

The figures below [2] show each of the above activation functions.

A feedforward neural network can consist of three types of nodes:

- Input Nodes – The Input nodes provide information from the outside world to the network and are together referred to as the “Input Layer”. No computation is performed in any of the Input nodes – they just pass on the information to the hidden nodes.

- Hidden Nodes – The Hidden nodes have no direct connection with the outside world (hence the name “hidden”). They perform computations and transfer information from the input nodes to the output nodes. A collection of hidden nodes forms a “Hidden Layer”. While a feedforward network will only have a single input layer and a single output layer, it can have zero or multiple Hidden Layers.

- Output Nodes – The Output nodes are collectively referred to as the “Output Layer” and are responsible for computations and transferring information from the network to the outside world.

In a feedforward network, the information moves in only one direction – forward – from the input nodes, through the hidden nodes (if any) and to the output nodes. There are no cycles or loops in the network [3]. This distinguishes feedforward networks from Recurrent Neural Networks, in which the connections between the nodes form a cycle.
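The one-directional flow can be sketched as a loop over layers, where each layer's output becomes the next layer's input. This is a minimal illustration, not a full implementation. The helper names `dense_layer` and `feedforward`, the 2-3-1 layout, and all weight values are hypothetical.

```python
import math

def dense_layer(inputs, weights, biases, activation):
    """Compute one layer: each row of `weights` feeds one node of the layer."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def feedforward(inputs, layers):
    """Pass `inputs` forward through a list of (weights, biases, activation)."""
    signal = inputs  # input nodes perform no computation, they just pass values on
    for weights, biases, activation in layers:
        signal = dense_layer(signal, weights, biases, activation)
    return signal

# Hypothetical network: 2 input nodes, 3 hidden nodes, 1 output node.
hidden = ([[0.1, 0.2], [-0.3, 0.4], [0.5, -0.6]], [0.0, 0.1, -0.1], math.tanh)
output = ([[0.7, -0.8, 0.9]], [0.05], math.tanh)
y = feedforward([1.0, 2.0], [hidden, output])
print(y)
```

Because the loop only ever moves from one layer to the next, there is no way for a node's output to feed back into an earlier layer, which is exactly the "no cycles" property.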

Backpropagation in Neural Networks

A neural network propagates the signal of the input data forward through its parameters towards the moment of decision, and then backpropagates information about the error through the network so that it can alter the parameters one step at a time.

You could compare a neural network to a large piece of artillery that is attempting to strike a distant object with a shell. When the neural network makes a guess about an instance of data, it fires, a cloud of dust rises on the horizon, and the gunner tries to make out where the shell struck and how far it was from the target. That distance from the target is the measure of error. The measure of error is then used to adjust the angle and direction of the gun (the parameters) before it takes another shot.

Backpropagation takes the error associated with a wrong guess by a neural network and uses it to update all of the network's parameters (weights and biases) in the direction that reduces the prediction error.
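As a rough sketch of what "adjusting parameters in the direction of less error" means, the code below applies backpropagation to the smallest network that still has a hidden layer: one tanh hidden node followed by one linear output node. The squared-error loss, the learning rate of 0.1, the starting parameters and the single training example are all assumptions made for illustration.

```python
import math

def forward(params, x):
    """Forward pass: x -> tanh hidden node -> linear output node."""
    h = math.tanh(params["w1"] * x + params["b1"])
    y = params["w2"] * h + params["b2"]
    return h, y

def backprop_step(params, x, target, learning_rate=0.1):
    """Apply one gradient-descent update; return the loss before the update."""
    h, y = forward(params, x)
    error = y - target  # dL/dy for the loss L = 0.5 * (y - target)^2
    grads = {"w2": error * h, "b2": error}
    # Propagate the error backward through the output weight and the tanh.
    d_hidden = error * params["w2"] * (1.0 - h * h)
    grads["w1"] = d_hidden * x
    grads["b1"] = d_hidden
    # Move every parameter one small step against its gradient.
    for name in params:
        params[name] -= learning_rate * grads[name]
    return 0.5 * error ** 2

# Hypothetical starting parameters and a single training example.
params = {"w1": 0.5, "b1": 0.0, "w2": -0.3, "b2": 0.1}
losses = [backprop_step(params, x=1.0, target=0.8) for _ in range(50)]
print(losses[0], losses[-1])
```

Each call is one "shot" in the artillery analogy: measure the miss, nudge the parameters, and fire again.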

ANN Project on the Iris Dataset

I built an ANN project on the Iris dataset, which contains 150 Iris flowers in 3 distinct classes. I compared different networks, tuned hyperparameters such as the learning rate, and tried different ratios for splitting the data into training, validation and test sets, in order to find the best approach for classifying the Iris dataset.
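To illustrate what comparing data ratios involves, here is a small sketch that splits sample indices into training, validation and test sets while keeping the classes balanced. It is not the code from the project. The function `stratified_split`, the candidate ratios and the seed are hypothetical, and the only dataset fact it relies on is that Iris has 50 samples in each of its 3 classes.

```python
import random

def stratified_split(labels, ratios=(0.6, 0.2, 0.2), seed=0):
    """Split sample indices into train/validation/test lists, class by class."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    train, validation, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(ratios[0] * len(indices))
        n_val = round(ratios[1] * len(indices))
        train += indices[:n_train]
        validation += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # whatever remains goes to the test set
    return train, validation, test

# Stand-in labels with the same shape as Iris: 3 classes of 50 samples each.
labels = [c for c in range(3) for _ in range(50)]
for ratios in [(0.6, 0.2, 0.2), (0.7, 0.2, 0.1), (0.8, 0.1, 0.1)]:
    train, validation, test = stratified_split(labels, ratios)
    print(ratios, len(train), len(validation), len(test))
```

Each candidate split would then be used to train and evaluate a network, with the validation set guiding choices such as the learning rate and the test set held back for the final comparison.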

References
